29 MAR 2021

Data Mining and Litigation (Part 1)

Posted by Carl McClain | Big Data, Data Analytics, Economics, Statistical Analysis

Data mining is one of the many buzzwords floating about in the data science ether, a noun high on enthusiasm, but typically low on specifics. It is often described as a cross between statistics, analytics, and machine learning (yet another buzzword). Data mining is not, as is often believed, a process that extracts data. It is more accurate to say that data mining is a process of extracting unobserved patterns from data. Such patterns and information can represent real value in unlikely circumstances.

Those who work in economics and the law may find themselves confused by, and suspicious of, the latest fads in computer science and analytics. Indeed, concepts in econometrics and statistics are already difficult to convey to judges, juries, and the general public. Expecting a jury composed entirely of mathematics professors is fanciful, so the average economist and lawyer must find a way to convincingly say that X output from Y method is reliable, and presents an accurate account of the facts. In that instance, why make a courtroom analysis even more remote with “data mining” or “machine learning”? Why risk bamboozling a jury, especially with concepts that even the expert witness struggles to understand? The answer is that data mining and machine learning open up new possibilities for economists in the courtroom, if used for the right reasons and articulated in the right manner.

Consider the following case study:

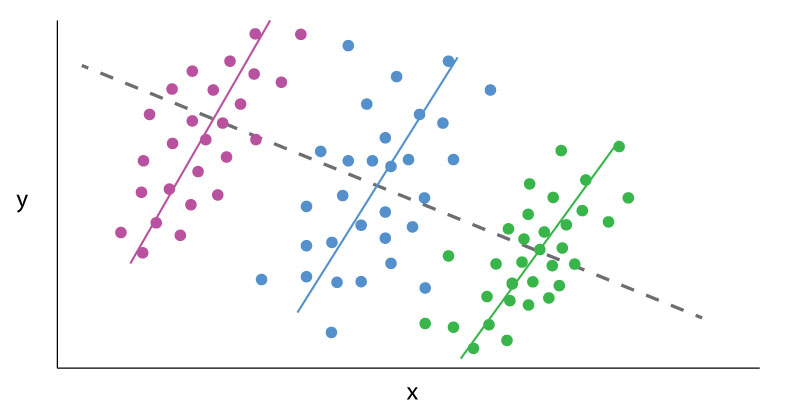

A class action lawsuit is filed against a major Fortune 500 company, alleging gender discrimination. In the complaint, the plaintiffs allege that female executives are, on average, paid less than their male counterparts. One of the allegations is that starting salaries for women are lower than those for men, and that this bias against women persists as they continue working and advancing at this company. After constructing several different statistical models, the plaintiffs’ expert witness economist confirms that the starting salaries for women are, on average, several percentage points lower than those for men. This pay gap is statistically significant, the findings are robust, and the regressions control for a variety of different employment factors, such as the employee’s department, age, education, and salary grade.

However, the defense now raises an objection in the following vein: “Of course men and women at our firm have different starting salaries. The men we hire tend to have more relevant prior job experience than the women.” An employee with more relevant prior experience would (one would suspect) be paid more than an employee with less. In that case, the perceived pay gap would not be discriminatory, but the result of an as-yet unaccounted-for variable. So, how can the expert economist quantify relevant prior job experience?
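The defense's objection is, in statistical terms, an omitted-variable argument. The sketch below illustrates the logic on purely synthetic data, not on anything from the case. It assumes, for illustration only, that the defense's claim is true: pay depends on experience and not on gender, and women in the simulated sample have less relevant experience on average. All names and numbers (`female`, `experience`, the 2-year gap, the 3% return per year) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000

# Synthetic employees: 1 = female, 0 = male (illustrative only).
female = rng.integers(0, 2, size=n).astype(float)

# Assumption: women average 2 fewer years of relevant prior experience.
experience = 8.0 - 2.0 * female + rng.normal(0.0, 2.0, size=n)

# Assumption: log starting salary depends on experience only,
# with no direct effect of gender.
log_salary = 11.0 + 0.03 * experience + rng.normal(0.0, 0.1, size=n)


def ols(y, *regressors):
    """Ordinary least squares with an intercept; returns the coefficients."""
    X = np.column_stack([np.ones(len(y)), *regressors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


# Model 1 omits experience; model 2 controls for it.
without_exp = ols(log_salary, female)
with_exp = ols(log_salary, female, experience)

print("gender coefficient, experience omitted:   ", round(without_exp[1], 3))
print("gender coefficient, experience controlled:", round(with_exp[1], 3))
```

In this simulation the gender coefficient is clearly negative when experience is left out and shrinks toward zero once it is included. That shows why the objection matters, but it says nothing about which way the real data would come out: the gap may well persist after the control is added, which is exactly what the expert needs to test.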

For larger firms, one source could be the employees’ job applications. In this case, each job application was filed electronically and can be read into a data analytics program. These job applications list the last dozen job titles the employee held prior to their position at this company. Now the expert economist lets out a small groan. In all, there are tens of thousands of unique job titles. It would be difficult (or if not difficult, silly) to add every single prior job title as a control in the model. So, it would make sense to organize these prior job titles into defined categories. But how?

This is one instance where new techniques in data science come into play.