13 OCT 2014

Working with large data sets: The new CMS medical records files

Posted by Dwight Steward, Ph.D. | U.S. Economy

The new data files released by the CMS regarding the payments made to U.S. medical doctors by drug and medical device manufacturers contain a treasure trove of information. However, the large size of the data will limit its use, and the nuggets that can be mined, for some.

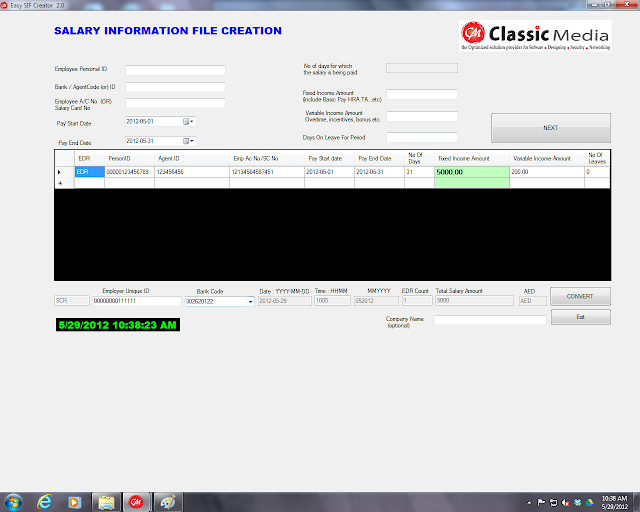

Using the statistical program STATA, which is generally one of the fastest and most efficient ways to handle large data sets, required an allocation of 6G of RAM just to read in the data. STATA is efficient at handling large wage and hour, employment, and business data sets (such as ones with many daily prices).

The table below shows what STATA required in terms of memory to be able to read the data:

Current memory allocation

| Settable | Current value | Description | Memory usage (1M = 1024k) |
|---|---|---|---|
| set maxvar | 5000 | max. variables allowed | 1.947M |
| set memory | 6144M | max. data space | 6,144.000M |
| set matsize | 400 | max. RHS vars in models | 1.254M |
| Total | | | 6,147.201M |
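The total reported above can be cross-checked with a few lines of arithmetic. The Python sketch below is only an illustration: the figures are copied from the STATA output, while the variable names and labels are hypothetical and are not part of STATA. It shows that nearly all of the allocation is data space, with only about 3M going to the variable and matrix settings.

```python
# Memory figures copied from the STATA output above, in megabytes (1M = 1024k).
# The dictionary keys are illustrative labels, not STATA identifiers.
allocations_mb = {
    "maxvar (5000 variables)": 1.947,
    "memory (data space)": 6144.000,
    "matsize (400 RHS vars)": 1.254,
}

# Sum the three components to reproduce the total line of the output.
total_mb = sum(allocations_mb.values())

# Convert to gigabytes using the same binary convention (1G = 1024M).
total_gb = total_mb / 1024

# Everything that is not data space is overhead from maxvar and matsize.
overhead_mb = total_mb - allocations_mb["memory (data space)"]

print(f"total: {total_mb:,.3f}M ({total_gb:.2f}G)")
print(f"overhead outside data space: {overhead_mb:.3f}M")
```

In other words, the 6G requirement comes almost entirely from the size of the data itself rather than from the other settings.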


When manual data entry of non-analyzable financial or wage data is not an option, OCR software and purpose-built data cleaning routines are a good alternative. Some wage and business data is electronic but is not analyzable in the format in which the employer or company maintains it.
