Data analytics is only beginning to tap into the unstructured data that forms the bulk of everyday life. Text messages, emails, maps, audio files, PDF files, pictures, and blog posts are all ‘unstructured data,’ as opposed to the structured data sources mentioned thus far. By a commonly cited estimate, up to 80% of all enterprise data is unstructured. So, how can a client’s text messages or recorded phone calls be analyzed like a SQL table? Unstructured data is not easily stored in pre-defined models or schemas, although some CRM tools (e.g. Salesforce) do store text-based fields. Typically, documents do not lend themselves to traditional database queries. This does not mean ‘structured’ and ‘unstructured’ data are in conflict with each other.

Document-based evidence is, of course, an integral part of the legal system. Lawyers and law offices now have access to comprehensive e-discovery programs, which sift through millions of documents based on keywords and terms. Selecting relevant information to prove a case is nothing new. The intersection with data analytics arises when hundreds of thousands or millions of text-based records are analyzed as a whole to prove an assertion in court.

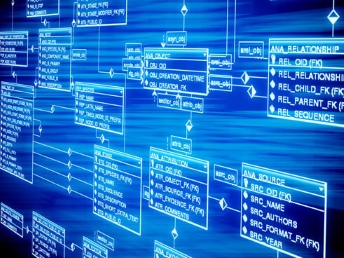

Turning unstructured text into analyzable, structured data is made possible by increasingly sophisticated methods. Some machine learning algorithms, for example, analyze pictures and pick up on repeating patterns. Text mining programs scrape PDFs, websites, and social media for content, and then load the text into preassigned columns and variables. Analyses can then be run on, for example, the positivity or negativity of a sentence, the frequency of certain words, or the correlation of certain phrases to one another. Natural language processing (NLP) includes speech recognition, which itself has seen significant progress in the past two decades. As a result, analytics on unstructured data is now more useful in producing relevant evidence.
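To make the idea concrete, here is a minimal sketch in Python of how free text might be turned into structured rows and word counts. The message texts, the tiny `POSITIVE` and `NEGATIVE` word lists, and the function names are all hypothetical and chosen only for illustration. A real analysis would rely on a vetted sentiment lexicon or a trained model rather than a handful of keywords.

```python
import re
from collections import Counter

# Hypothetical mini-lexicons, for illustration only.
POSITIVE = {"good", "great", "thanks", "happy", "agree"}
NEGATIVE = {"bad", "problem", "angry", "delay", "refuse"}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def message_features(message_id, text):
    """Turn one free-text message into a structured row of columns."""
    tokens = tokenize(text)
    positive_hits = sum(token in POSITIVE for token in tokens)
    negative_hits = sum(token in NEGATIVE for token in tokens)
    return {
        "message_id": message_id,
        "word_count": len(tokens),
        "positive_hits": positive_hits,
        "negative_hits": negative_hits,
        # Crude score: above zero leans positive, below zero leans negative.
        "sentiment": positive_hits - negative_hits,
    }


def word_frequencies(texts):
    """Count how often each word appears across a collection of texts."""
    counts = Counter()
    for text in texts:
        counts.update(tokenize(text))
    return counts


# Hypothetical messages standing in for a much larger collection.
messages = {
    "m1": "Thanks, the delivery was great.",
    "m2": "Another delay. This is a bad problem.",
    "m3": "The delay again?",
}

rows = [message_features(mid, text) for mid, text in messages.items()]
freq = word_frequencies(messages.values())

for row in rows:
    print(row)
print(freq.most_common(3))
```

Each message becomes one row with fixed columns, which is the kind of table a conventional query or statistical tool can then work with.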

As important as the unstructured data is its corresponding metadata: data that describes data. A text message or email carries additional information about itself, such as the author, the recipient, the time, and the length of the message. These bits of information can be stored in a structured data set, without any reference to the original content, and then analyzed. For example, a company may hold metadata on electronic documents at specific points in a transaction’s life cycle; running a pattern analysis on this metadata could identify whether certain documents were created, altered, or destroyed after an event.
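A simple version of such a pattern analysis can be sketched in Python as follows. The records, field names (`doc_id`, `author`, `action`, `timestamp`), and the event date are hypothetical; actual document management systems expose metadata in their own formats.

```python
from datetime import datetime

# Hypothetical metadata records: no document content, only data about each document.
metadata = [
    {"doc_id": "D1", "author": "alice", "action": "created", "timestamp": "2020-03-01T09:00:00"},
    {"doc_id": "D1", "author": "bob", "action": "modified", "timestamp": "2020-03-12T17:30:00"},
    {"doc_id": "D2", "author": "alice", "action": "created", "timestamp": "2020-03-02T10:15:00"},
    {"doc_id": "D3", "author": "carol", "action": "deleted", "timestamp": "2020-03-11T08:05:00"},
]


def actions_after(records, event_time, actions=("created", "modified", "deleted")):
    """Return the records whose action took place strictly after event_time."""
    flagged = []
    for record in records:
        timestamp = datetime.fromisoformat(record["timestamp"])
        if record["action"] in actions and timestamp > event_time:
            flagged.append(record)
    return flagged


# Hypothetical event of interest, e.g. the date a dispute arose.
event = datetime(2020, 3, 10)

flagged = actions_after(metadata, event)
flagged_ids = sorted({record["doc_id"] for record in flagged})
print(flagged_ids)
```

The check never reads the documents themselves. It relies only on who did what and when, which is why metadata can be analyzed like any other structured table.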

In instances of high-profile fraud, such as the London Interbank Offered Rate (LIBOR) manipulation scandal, numerous emails and text messages between traders added a new dimension to regulators’ cases against major banks. Overwhelming and repeated textual evidence, which can be produced through analyses of unstructured data, is yet another tool for litigating parties to prove a pattern of misconduct.